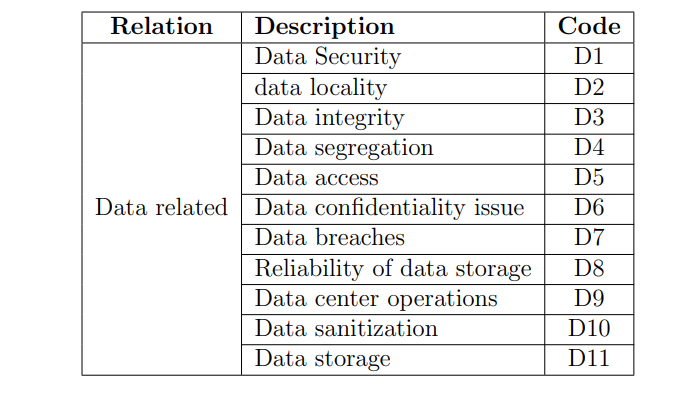

Cloud computing security is a major concern and faces various challenges that need attention [165][17]. Recent surveys of IT executives and CIOs conducted by IDC showed that security was the most frequently cited challenge (74%) in the cloud computing field [3][85]. A comparison with grid computing systems also indicates that cloud computing security measures are simpler and less secure [55]. Security in cloud computing depends on the cloud service provider, who is responsible for storing data and providing security [63]. Following the data analysis process explained in the previous section, terms in the literature with similar meanings are grouped together (for example, terms such as data security and data locality are categorized under data-related challenges), yielding 8 sections, each of which is explained in detail below. The grouping is based on how the terms are explained and on categorizations proposed by some authors in their discussions.

4.2.1 Data

Data security (D1)

Information from articles that discuss data security and data protection is considered. Security provided by cloud SPs might not be highly cost-effective when implemented in small companies. Moreover, when two or more organizations share a common resource, there is a risk of data misuse, so data repositories must be secured [105]. Not only the repositories but also the data itself should be secured at every stage: in storage, in transit, and during processing [92]. Since this kind of resource sharing is prevalent in CC, data protection is regarded as the most important of the CC challenges [170][134][109]. Keeping data secure in shared environments is more challenging than protecting it on a personal computer [160][57], a problem that emerged with the introduction of the CC paradigm [107]. The author of [164] explains how data security affects the various service models, namely SaaS, PaaS, and IaaS; the author of [70] argues that data security is the primary challenge for cloud acceptance; and the author of [107] stresses that cloud data security is an issue that must be taken care of. For stronger security of data repositories, it is important to provide better authentication, authorization, and access control for data stored in the cloud, in addition to on-demand computing capability [63][28][133].

The following are three key areas of data security in CC [169]:

- Confidentiality: When enterprise data is stored outside organizational boundaries, it needs to be protected from vulnerabilities. To that end, employees must adopt security checks that ensure their data stays protected from malicious attacks [10][2][58]. The following tests help organizations assess and validate the extent to which data is protected from malicious users [144][26][178][154]:

(a) Cross-site scripting [XSS]

(b) Access control weaknesses

(c) OS and SQL injection flaws

(d) Cross-site request forgery [CSRF]

(e) Cookie manipulation

(f) Hidden field manipulation

(g) Insecure storage

(h) Insecure configuration

Example: Regulations and standards such as the Payment Card Industry Data Security Standard (PCI DSS) restrict how data may be handled; for instance, data may not be allowed to leave the European Union [144][26][154]. Such rules can also enforce encryption of certain areas of data, so that only specific users are permitted to access specific areas of data [119].

- Integrity: No common policy exists for data exchange. To maintain the security of client data, thin clients with only a few local resources are used. Because such clients have few resources, users are advised not to store any personal data, such as passwords, on them; since passwords are not stored on desktops, they cannot be stolen from there. The integrity of data can be further assured by [10]:

• Using additional features, such as unpublished APIs, to secure particular sections of data.

• Avoiding DHCP and FTP, which have long been regarded as insecure.

- Availability: Availability is the most problematic area: several companies report downtime (e.g., caused by denial-of-service attacks) as a major issue. The availability of a service generally depends on the contract signed between the client and the vendor. Other points that need to be highlighted with respect to data security are [28]:

• Who has rights over data (i.e., does data still belong to the company?)

• Whether any other company or organization is involved (i.e., is a third-party organization involved?) [10][78].

• Customers using CC applications need to check whether the data processing carried out by cloud service providers is lawful.

• If data protection fails while data is being processed, it could result in administrative, criminal, or civil sanctions (depending on the country controlling the data). Such failures may arise from multiple transfers of data logs between federated cloud providers.

- Cryptographic algorithms should be well maintained and updated regularly; failing to do so could lead to the disclosure of personal data [169].

- Encrypting and storing data does not by itself fully protect it. When searching for information stored on CC servers, care should be taken that retrieval is itself secure, because traditional searches can disclose data to other companies or individuals [156]. Moreover, complex encryption schemes can raise issues when retrieving data from storage [126].
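One family of techniques aimed at the secure-search problem above is searchable encryption. The sketch below shows only its simplest ingredient, under stated assumptions: the client derives a deterministic keyword token with HMAC-SHA256 under a secret key it never uploads, so the server can match tokens without seeing plaintext keywords. The `keyword_token` function and `ServerIndex` class are hypothetical, the documents themselves are assumed to be encrypted separately (not shown), and this naive scheme still reveals to the server which documents match a repeated query.

```python
import hashlib
import hmac
import secrets
from collections import defaultdict


def keyword_token(key: bytes, keyword: str) -> str:
    """Deterministic token for a keyword; without `key` the server cannot invert it."""
    normalized = keyword.strip().lower().encode("utf-8")
    return hmac.new(key, normalized, hashlib.sha256).hexdigest()


class ServerIndex:
    """Hypothetical server-side index: it stores only tokens and document ids."""

    def __init__(self) -> None:
        self._postings = defaultdict(set)  # token -> set of document ids

    def add(self, token: str, doc_id: str) -> None:
        self._postings[token].add(doc_id)

    def search(self, token: str) -> set:
        return set(self._postings.get(token, set()))


# Client side: the key stays local; only tokens are uploaded.
client_key = secrets.token_bytes(32)
index = ServerIndex()
documents = {
    "doc-1": ["invoice", "tax", "2023"],
    "doc-2": ["payroll", "tax"],
}
for doc_id, words in documents.items():
    for word in words:
        index.add(keyword_token(client_key, word), doc_id)

# Query: the client sends a token, never the word "tax" itself.
matches = index.search(keyword_token(client_key, "tax"))
print(sorted(matches))  # ['doc-1', 'doc-2']
```

Practical searchable-encryption schemes add randomization and other measures to reduce this leakage; the sketch is meant only to show why a search need not expose plaintext keywords to the provider.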

Data locality (D2)

Information from articles that discuss data locality, jurisdictional issues, risk of seizure, and loss of governance is considered. When using CC applications or storage services, questions such as “Does the CSP allow control over the data location?” arise; the reasons for asking this question are explained in this section [123]. In CC, data can be hosted anywhere, and in most cases the customer does not know where his data is located, because data is generally distributed over several regions [110][85][100]. When the geographical location of data changes, the laws governing that data also change. Consequently, the user's data (information, applications, etc.) stored in the cloud and distributed over several regions is subject to the compliance and data privacy laws of whichever country it is located in, so the customer must be informed about the location of his data [144]. It is very helpful for customers if the SP reports the location of data whenever it changes, or provides a mechanism to track it [110]. If a customer raises any concern about the location of data, it should be dealt with immediately [142]. This matters because if the customer is found to violate the laws of a certain territory, his/her data can be seized by the government. And since information from many customers is stored in the same data repository, such a seizure may also compromise the data of other companies [46]. Hence, before storing data in the cloud, users must confirm with providers whether data will be stored with jurisdictional constraints taken into consideration. They must also verify that existing contractual commitments show agreement to local privacy requirements [86][22][119][72].
For example, some regions and countries, such as Europe and South Africa, do not let certain data leave their borders because the information is potentially sensitive [144][109]. Because of these problems, some customers also want their data to stay in the same geographical location as themselves [7]. In [55], the author mentions that clouds will face significant challenges in handling cloud applications while managing the data locality of those applications and their access patterns. In addition, the author of [105] is concerned that physical protection varies from one data center to another.
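The customer-side check described above, confirming that data is stored within jurisdictional constraints, can be sketched as a placement policy evaluated against the locations a provider reports. The classification labels, region names and the `ALLOWED_REGIONS` table below are hypothetical; in practice the permitted jurisdictions would come from legal advice and the provider's contractual commitments.

```python
from dataclasses import dataclass

# Hypothetical policy: which regions each data classification may be stored in.
ALLOWED_REGIONS = {
    "public": {"eu-west", "eu-central", "us-east", "af-south"},
    "personal-eu": {"eu-west", "eu-central"},  # must stay inside Europe
    "personal-za": {"af-south"},               # must stay inside South Africa
}


@dataclass(frozen=True)
class Placement:
    object_id: str
    classification: str
    region: str  # region reported by the provider for this object


def residency_violations(placements):
    """Return placements whose region is not allowed for their classification.

    Unknown classifications have no allowed regions, so they are always
    reported (fail closed).
    """
    violations = []
    for p in placements:
        allowed = ALLOWED_REGIONS.get(p.classification, set())
        if p.region not in allowed:
            violations.append(p)
    return violations


report = [
    Placement("cust-001", "personal-eu", "eu-west"),
    Placement("cust-002", "personal-eu", "us-east"),
    Placement("brochure", "public", "us-east"),
]
for v in residency_violations(report):
    print(f"{v.object_id}: {v.classification} data stored in {v.region}")
# prints: cust-002: personal-eu data stored in us-east
```

Failing closed on unknown classifications reflects the paragraph's point that location concerns should be dealt with before data is stored, not after.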

Data integrity (D3)

If a system maintains integrity, its assets can be modified only by authorized parties or in authorized ways; such modification may concern software or hardware entities of the system [179]. In an isolated system with a single database, data integrity can be maintained via database constraints and transactions. In a distributed environment, however, where databases are spread across multiple locations, data integrity must be maintained carefully to avoid data loss [99][100]. For example, when an on-premises application accesses or changes data in the cloud, the transaction must complete and data integrity must be maintained; failing to do so can cause data loss [75]. In general, every transaction has to follow the ACID properties (Atomicity, Consistency, Isolation, and Durability) to preserve data integrity [179][46][130][19]. Verifying data integrity is one of the key issues in cloud data storage, especially when the server is untrusted [120][81].
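For the single-database case mentioned above, the following minimal sketch uses Python's built-in `sqlite3` module to show a constraint and a transaction working together. A `CHECK` constraint rejects a negative balance, and because both updates run inside one transaction, a failed transfer is rolled back as a whole (atomicity). The table and function names are illustrative only, and the sketch does not address the harder distributed case.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts ("
    " id TEXT PRIMARY KEY,"
    " balance INTEGER NOT NULL CHECK (balance >= 0))"
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("a", 100), ("b", 0)])
conn.commit()


def transfer(conn, src, dst, amount):
    """Move `amount` from src to dst; both updates commit or neither does."""
    try:
        with conn:  # commits on success, rolls back on any exception
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst)
            )
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src)
            )
        return True
    except sqlite3.IntegrityError:
        return False  # CHECK constraint failed; the credit to dst was undone too


def balances(conn):
    return dict(conn.execute("SELECT id, balance FROM accounts ORDER BY id"))


print(transfer(conn, "a", "b", 30), balances(conn))   # True {'a': 70, 'b': 30}
print(transfer(conn, "a", "b", 500), balances(conn))  # False {'a': 70, 'b': 30}
```

The credit is applied before the debit on purpose: when the debit violates the constraint, the already-applied credit is rolled back too, which is exactly the partial update that atomicity prevents.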

Web services frequently face transaction-management problems because they rely on HTTP, which neither supports transactions nor guarantees delivery. The only way to handle this issue is to implement transaction management at the Application Programming Interface (API) level. Standards for managing data integrity with web services do exist (such as WS-Transaction and WS-Reliability), but because they are not mature, they have not been implemented. Most SaaS vendors expose their web service APIs without any support for transactions. Additionally, each SaaS application may have different levels of availability and different SLAs (Service Level Agreements), which further complicates the management of transactions and data integrity across multiple SaaS applications.
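One common pattern for implementing transaction management at the API level is a saga; it is named here as an illustrative choice, not as a technique prescribed by the sources above. Each step against a SaaS API is paired with a compensating action, and if a later step fails, the completed steps are undone in reverse order. The CRM and billing functions below are hypothetical in-memory stand-ins for remote HTTP calls.

```python
def run_saga(steps):
    """Run (name, action, compensation) steps in order.

    If an action raises, run the compensations of the already completed
    steps in reverse order and report failure. Returns (succeeded, log).
    """
    log = []
    completed = []
    for name, action, compensate in steps:
        try:
            action()
        except Exception as exc:  # e.g. a timeout from a remote SaaS API
            log.append(f"failed {name}: {exc}")
            for done_name, done_compensate in reversed(completed):
                done_compensate()
                log.append(f"compensated {done_name}")
            return False, log
        completed.append((name, compensate))
        log.append(f"done {name}")
    return True, log


# Hypothetical stand-ins for two SaaS applications' state.
crm = {}
billing = {}


def create_customer():
    crm["c1"] = {"name": "Acme"}


def delete_customer():
    crm.pop("c1", None)


def open_invoice():
    raise ConnectionError("billing API timed out")  # simulated failed HTTP call


def void_invoice():
    billing.pop("inv1", None)


ok, log = run_saga([
    ("create customer in CRM", create_customer, delete_customer),
    ("open invoice in billing", open_invoice, void_invoice),
])
print(ok, crm)  # False {}
for line in log:
    print(line)
```

Compensation is weaker than a true rollback: other clients may observe the intermediate state, and compensating calls must themselves be safe to retry, since the network can fail during compensation as well.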

Lack of data-level integrity controls could result in profound problems. Architects and developers need to handle this carefully to make sure that the database's integrity is not compromised when shifting to cloud computing applications [144]. Failing to check data integrity may lead to undetected data fabrication; in some cases, if the CSP removes data because it is rarely accessed, the user will not know until he attempts to access it [153]. The author of [98] compares protocols used for remote data integrity checking and observes that they focus either on a single-server or on a multi-server scenario, but not on a dynamic setting such as CC. In [126], the author raises concerns about maintaining a local copy of each user's data in order to check its integrity. CSPs hold user metadata to identify users and grant access, and to manage the integrity of data in cloud storage, this metadata must be managed correctly [31].
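The concern about keeping a local copy can be illustrated with one simple remote-integrity idea, offered as an illustration rather than as the protocol of any cited article. Before upload, the owner precomputes a few random nonces and the expected digests SHA-256(nonce || data), keeps only those small pairs, and discards the local copy. Later the owner reveals one unused nonce; only a server that still holds the intact data can return the matching digest. All function names are hypothetical, and the scheme supports only a fixed number of checks and does not handle updates to the data.

```python
import hashlib
import hmac
import secrets


def precompute_challenges(data: bytes, count: int):
    """Owner side, before upload: keep (nonce, expected digest) pairs, not the data."""
    challenges = []
    for _ in range(count):
        nonce = secrets.token_bytes(16)
        challenges.append((nonce, hashlib.sha256(nonce + data).digest()))
    return challenges


def server_respond(stored: bytes, nonce: bytes) -> bytes:
    """Server side: can only answer correctly if it still holds the exact data."""
    return hashlib.sha256(nonce + stored).digest()


def audit(challenges, respond) -> bool:
    """Owner side: spend one unused challenge and compare in constant time."""
    nonce, expected = challenges.pop()
    return hmac.compare_digest(respond(nonce), expected)


original = b"ledger row;" * 1000
checks = precompute_challenges(original, count=3)

print(audit(checks, lambda n: server_respond(original, n)))  # True

tampered = original[:-1] + b"X"  # a single corrupted byte on the server
print(audit(checks, lambda n: server_respond(tampered, n)))  # False
```

Because each nonce is revealed only once, a server cannot answer future audits from cached digests; the cost is that the owner must stop auditing (or re-upload) once the precomputed challenges run out.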

The author of [120] compares different protocols for checking data integrity (entire-data-dependent tag, data-block-dependent tag, data-independent tag-based, and data-replication-based protocols). The comparison shows that most proposed methods have data integrity as their primary objective and also support dynamic operations in cloud storage, but there is still room to improve these methods.

Data segregation (D4)

Another issue in cloud computing is multi-tenancy. Since multi-tenancy allows multiple users to store data on cloud servers using different built-in applications at the same time, various users' data resides in a common place. This kind of storage creates a possibility of data intrusion: malicious users can retrieve or hack into others' data by using some application or by injecting client code [144][72]. The user should ensure that data stored in the cloud is separated from other customers' data [55][85][123]. Article [142] suggests that any encryption scheme used should be assessed and certified as safe, and that cloud providers should use only standardized encryption algorithms and protocols.

Vulnerabilities in data segregation can be detected using the following tests [144]:

- SQL injection flaws

- Data validation

- Insecure storage
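As a rough illustration of segregation in a shared database, and of what the SQL injection test looks for, the sketch below stores two tenants' rows in one `sqlite3` table. The unsafe lookup builds SQL by string concatenation, so a crafted input returns the other tenant's rows; the safe lookup binds parameters and always filters on the caller's `tenant_id`. The schema, tenant names and function names are hypothetical, and row-level filtering is only one segregation approach (separate schemas or databases per tenant are others).

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE records (tenant_id TEXT NOT NULL, name TEXT, secret TEXT)")
db.executemany(
    "INSERT INTO records VALUES (?, ?, ?)",
    [("acme", "alice", "acme-payroll"), ("globex", "bob", "globex-payroll")],
)


def find_unsafe(tenant_id: str, name: str):
    # VULNERABLE: user input is pasted directly into the SQL text.
    sql = f"SELECT secret FROM records WHERE tenant_id = '{tenant_id}' AND name = '{name}'"
    return [row[0] for row in db.execute(sql)]


def find_safe(tenant_id: str, name: str):
    # Placeholders keep input as data; the tenant filter is always applied.
    sql = "SELECT secret FROM records WHERE tenant_id = ? AND name = ?"
    return [row[0] for row in db.execute(sql, (tenant_id, name))]


payload = "x' OR '1'='1"
print(find_unsafe("acme", payload))  # ['acme-payroll', 'globex-payroll']
print(find_safe("acme", payload))    # []
```

The unsafe query becomes `... AND name = 'x' OR '1'='1'`, and because `AND` binds tighter than `OR`, the trailing condition matches every row, including another tenant's.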

Data access (D5)

Information from articles that discuss data access, access rights, privileged user access, access control, and administrative access is considered. This issue mainly relates to security policies. Policies are described as “Conditions necessary to obtain trust, and can also prescribe actions and outcomes if certain conditions are met” [121]. Every organization has its own security policies. Based on these policies, an employee is given access to a section of data, and in some cases employees might not be given complete access. When granting access, it is necessary to know which piece of data is accessed by which user [11][109]. For this purpose, various interfaces or encryption techniques are used, and keys are shared only with authorized parties. Poor key management can also make it difficult to provide security. Access control lists might be used to prevent key mismanagement, but as the number of keys increases, so does the complexity of managing them [95]. Likewise, as the number of interfaces used to manage security increases, access management can also become complicated [166].
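Policy-driven access, together with the requirement to know which user accessed which piece of data, can be sketched as a role-to-permission table plus an append-only audit log. The roles, data sections and the `AccessController` class are hypothetical; the sketch deliberately records denied attempts as well as granted ones.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical organizational policy: role -> data sections it may read.
POLICY = {
    "hr": {"payroll", "staff-directory"},
    "engineer": {"staff-directory"},
}


@dataclass
class AccessController:
    user_roles: dict                              # user -> role
    audit_log: list = field(default_factory=list)

    def can_read(self, user: str, section: str) -> bool:
        role = self.user_roles.get(user)          # None for unknown users
        allowed = section in POLICY.get(role, set())
        # Every decision is logged: when (UTC), who, what, and the outcome.
        self.audit_log.append(
            (datetime.now(timezone.utc).isoformat(), user, section, allowed)
        )
        return allowed


ac = AccessController(user_roles={"carol": "hr", "dave": "engineer"})
print(ac.can_read("carol", "payroll"))    # True
print(ac.can_read("dave", "payroll"))     # False
print(ac.can_read("mallory", "payroll"))  # False (unknown user has no role)
for _, user, section, allowed in ac.audit_log:
    print(user, section, "granted" if allowed else "denied")
```

Centralizing the decision in one function keeps the policy in a single table, which is one way to limit the growth in management complexity that comes with adding more interfaces.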

In any case, to keep data away from unauthorized users, the security policies must be strictly followed [144][72]. Unauthorized access could come from an insider or from any user trying to access the cloud [136]. Data access control is a specific issue, and various standalone approaches to controlling access to data in CC are described in [45].

Privileged user access: Since access is given through the Internet, giving access to privileged users is an increasing security risk in cloud computing. When sensitive data is transferred over the Internet, an unauthorized user may gain access to and control of the data. To avoid this, the user must use data encryption together with additional protection mechanisms, such as one-time passwords or multi-factor authentication, that provide strong authentication and encrypted protection for all administrative traffic [3][86][22].
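One-time passwords are a common second factor for the privileged administrative access described above. The sketch below implements the HOTP algorithm of RFC 4226 and its time-based variant TOTP (RFC 6238) using only the standard library. The function names, the 30-second step and the one-step clock-skew tolerance are choices made for this illustration, and the secret shown is the published RFC 4226 test secret, not something to deploy.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at=None, step: int = 30, digits: int = 6) -> str:
    """Time-based OTP (RFC 6238): HOTP over the number of elapsed time steps."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, code: str, at=None, step: int = 30, window: int = 1) -> bool:
    """Accept codes from the current step and `window` steps either side (clock skew)."""
    now = time.time() if at is None else at
    current = int(now // step)
    for counter in range(max(0, current - window), current + window + 1):
        if hmac.compare_digest(hotp(secret, counter), code):
            return True
    return False


rfc_secret = b"12345678901234567890"  # test secret from RFC 4226, Appendix D
print(hotp(rfc_secret, 0))                        # 755224
print(totp(rfc_secret, at=59))                    # 287082
print(verify_totp(rfc_secret, "287082", at=59))   # True
```

Because each code is valid only for a short window, a password captured in transit is of little use later, which is what makes OTPs suitable for protecting administrative logins.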

Data confidentiality issue (D6)

Cloud computing allows users to store their own information on remote servers, which means that content such as user data, videos, tax preparation charts, etc., can be stored with a single cloud provider or with multiple cloud providers. When users store their data on such servers, data confidentiality is a requirement [170]. Storing data on remote servers also raises privacy and confidentiality issues for individuals, businesses, government agencies, etc.; some of these issues are listed below [144][76]:

- CC has significant implications for the privacy of personal information and for the confidentiality of business and government information.

- The terms of service and privacy policy established by the cloud provider are key factors that can significantly change users' privacy and confidentiality risks.

- When a user discloses information to a cloud provider, privacy and confidentiality rights, obligations, and status may change, depending on the type of information and the category of CC user.

- The legal status of protections for personal or business information may be greatly affected by disclosure and remote storage.

- The location of information may have considerable effects on the privacy and confidentiality protection of that information and on the privacy obligations of those who process or store it.

- Information in the cloud may have multiple legal locations at the same time, with differing legal consequences.

- A cloud provider can examine user records for criminal activity and other matters according to law.

- Legal uncertainties make it difficult to determine the status of information in the cloud and the privacy and confidentiality protections available to users.

In addition, maintaining confidentiality requires understanding data and its classification: users should know which data is stored in the cloud and which levels of accessibility govern each piece of data [160].

Data Breaches (D7)

Since data from various users and organizations is stored in a cloud environment, the entire environment becomes a high-value target: if a user with malicious intent enters it, all of the data stored there is at risk [144][166]. A breach can occur due to accidental transmission issues (such breaches have happened in Amazon's and Google's clouds) or due to an insider attack [100][143]. In any breach, data is compromised, which is always a security risk; data breaches are also listed as a top threat by the CSA [143]. A breach notification process is therefore strongly needed in the cloud, because if breaches are not reported, serious attacks may go unnoticed [90].

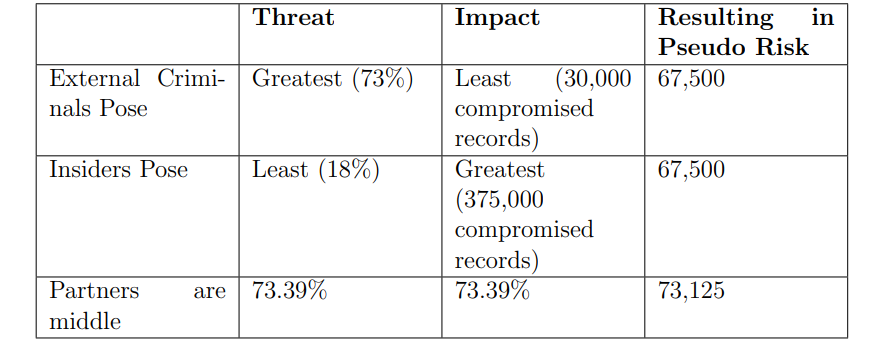

Table 4.1: Business breach report blog

The business breach report blog provides information on the impact of breaches [144], as shown in Table 4.1. The threat from external criminals is the most common (73% of breaches) but is associated with the fewest compromised records. Conversely, the threat from insiders is the least common (18%), but the impact they cause is the greatest.

Reliability of data storage (D8)

As long as there are no problems with the virtualization manager, developers have better control over security. Virtual machines have many issues, but they are still a good solution for providing secure operation in the CC context. With virtualization growing in every aspect of cloud computing, issues arise regarding the reliability of data storage and the owner's ability to retain control over data regardless of its physical location [144]. Users also consider storage mechanisms in CC to be unreliable [33], and reliability of data storage is a general issue in CC [99]. The issue applies to every data entity stored in the cloud; in Infrastructure as a Service (IaaS), the service provider is expected to ensure that an organization's data is kept secure throughout its life cycle (even after the user has removed his account) [38]. Another issue to consider is that even a virtual machine must be stored on physical infrastructure, which also poses security risks and needs to be protected [125][164]. In addition to these problems, [126] explains various storage concerns and shows to what extent the cloud can be depended on with respect to the reliability of data storage.
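One common way to make data storage more reliable, added here as an illustration rather than taken from the cited articles, is to keep several replicas, record a checksum at write time, verify every read against it, and repair replicas that are missing or corrupt. The `ReplicatedStore` class and its in-memory "nodes" are hypothetical stand-ins for separate storage servers.

```python
import hashlib


class ReplicatedStore:
    """Keep several copies of each object and check reads against a SHA-256 digest."""

    def __init__(self, replicas: int = 3) -> None:
        self.nodes = [{} for _ in range(replicas)]  # stand-ins for separate storage nodes
        self.checksums = {}                         # key -> digest recorded at write time

    def put(self, key: str, value: bytes) -> None:
        self.checksums[key] = hashlib.sha256(value).hexdigest()
        for node in self.nodes:
            node[key] = value

    def get(self, key: str) -> bytes:
        expected = self.checksums[key]
        good = None
        bad_nodes = []
        for node in self.nodes:
            value = node.get(key)
            if value is not None and hashlib.sha256(value).hexdigest() == expected:
                if good is None:
                    good = value
            else:
                bad_nodes.append(node)
        if good is None:
            raise OSError(f"all replicas of {key!r} are missing or corrupt")
        for node in bad_nodes:  # read repair: replace bad copies with a verified one
            node[key] = good
        return good


store = ReplicatedStore(replicas=3)
store.put("report.pdf", b"quarterly report v1")
store.nodes[0]["report.pdf"] = b"bit rot"  # simulate silent corruption on one node
del store.nodes[1]["report.pdf"]           # and a lost copy on another
print(store.get("report.pdf"))             # b'quarterly report v1'
print(all(n["report.pdf"] == b"quarterly report v1" for n in store.nodes))  # True
```

The checksum is what turns replication into a reliability guarantee: without it, a reader could not tell which of two disagreeing copies is correct.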

Data center operations (D9)

Information from articles that discuss losing control over data, incident response, data center operational management, data center operations, data management, disaster recovery, and data transfer bottlenecks is grouped here.

Organizations using cloud computing applications are concerned about protecting data while it is being transferred between the cloud and the business. A further concern is what will happen to users' data if something happens to the cloud storage [162]. If data is not managed properly, data storage and data access can become issues [15]. The author of [54] is concerned that, although interest in the cloud is growing, governance issues such as data integration, data consistency, and policy management are not given the attention they require. Adding to this, [178] states that the cloud is not secure unless cloud providers have mechanisms to debug, diagnose, and analyze distributed queries.

Issues related to data center operations are discussed below:

Recovery: In case of disaster, cloud providers should be able to answer users' questions, such as what happened to the data stored in the cloud. If the cloud service provider does not replicate data across multiple sites, the system could fail under certain circumstances (e.g., when a disaster occurs) [119][10][162]. Even after a disaster, the cloud should be able to recover, or provide some other means, so that the user's business is not halted [65]. Therefore, service providers can be asked: “Is complete restoration possible, and if so, how long does it take?” [119]. In PaaS, disaster recovery needs more attention, while in IaaS data center construction is a key point [164].

Losing control over data: As data is outsourced, control over it is gradually lost. To avoid major loss, there should be transparency in how data is managed and accessed [142]. As a solution, the Amazon Simple Storage Service (S3) APIs provide both object-level and bucket-level access control. Under object-level control, each authenticated user is authorized to perform certain actions on each object he/she needs to access to perform his/her task. Under bucket-level control, authorization is granted at the level of a bucket (a container for objects stored in Amazon S3).
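The two levels of granularity mentioned above can be seen in an Amazon S3 bucket policy, where one statement targets the bucket itself and another targets objects inside it. The sketch only builds the JSON policy document with the standard library; no AWS call is made. The bucket name, prefix and IAM user ARN are hypothetical placeholders, and S3's per-object ACLs, a separate mechanism, are not shown.

```python
import json


def read_only_policy(bucket: str, prefix: str, principal_arn: str) -> str:
    """Return an S3 bucket policy (JSON) letting one principal list and read a prefix."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Bucket-level statement: the resource is the bucket itself.
                "Sid": "ListOnlyThePrefix",
                "Effect": "Allow",
                "Principal": {"AWS": principal_arn},
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}*"]}},
            },
            {
                # Object-level statement: the resource is the objects under the prefix.
                "Sid": "ReadObjectsUnderPrefix",
                "Effect": "Allow",
                "Principal": {"AWS": principal_arn},
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
            },
        ],
    }
    return json.dumps(policy, indent=2)


# Hypothetical bucket, prefix and IAM user (account ID 111122223333 is a placeholder).
print(read_only_policy("example-bucket", "reports/",
                       "arn:aws:iam::111122223333:user/alice"))
```

Scoping the object-level statement to a prefix rather than the whole bucket is one way to provide the transparency the paragraph asks for: the policy states explicitly which data each principal can reach.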

Security can therefore be applied at both the object and the bucket level [46].

Data transfer bottleneck: Transferring data across cloud boundaries can incur data transfer costs. To reduce these costs when CC applications are used, cloud users and cloud providers need to focus on the implications of data placement and data transfer at every level. Shipping disks, as done with Amazon, has been used to overcome this data transfer bottleneck [13][12].
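Why shipping disks can beat the network is a matter of simple arithmetic. The helper below estimates transfer time from data volume and sustained bandwidth; the 10 TB volume and the two link speeds are assumed figures chosen for illustration, and protocol overhead is ignored.

```python
def transfer_days(num_bytes: float, bits_per_second: float) -> float:
    """Days needed to move num_bytes over a link sustaining bits_per_second."""
    seconds = num_bytes * 8 / bits_per_second
    return seconds / 86_400


ten_tb = 10e12  # 10 TB in decimal bytes
print(f"{transfer_days(ten_tb, 20e6):.1f} days at 20 Mbit/s")  # 46.3 days at 20 Mbit/s
print(f"{transfer_days(ten_tb, 1e9):.1f} days at 1 Gbit/s")    # 0.9 days at 1 Gbit/s
```

At roughly 46 days for 10 TB over the slower link, an overnight courier shipment of disks is far faster, which is the reasoning behind the disk-shipping approach mentioned above.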

Data sanitization (D10)

Information from articles that deal with data sanitization and insecure destruction or incomplete deletion of data is considered. Data sanitization is the process of removing unwanted or outdated sensitive data from a storage device. When a user updates data in the cloud, he/she can secure the data by encrypting it before storing it in the cloud. Users are very concerned about what will happen to their data after it passes its “use by” date: will it be deleted after the contract is completed? [69][46][78][7]. Data that must be deleted, or is no longer needed, should be deleted securely so that unauthorized access is not possible [126][100][142]. It is also beneficial to keep users informed about how their data is deleted (when deletion is requested), which also lets them know whether the service provider is keeping the data despite a deletion request [119]. Amazon Web Services (AWS) procedures include a decommissioning process for storage devices that reach the end of their useful life, intended to ensure that user data is not exposed to unauthorized users. Sanitization is also applied to the data backed up for recovery and restoration of service [78]. While an anti-malware scan is being performed, identifying useful pieces of data and deleting unwanted information can become complicated [138].
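At the level of a single file that the user controls, a minimal sanitization step is to overwrite the contents with random bytes before deleting the file. The sketch below does this with the standard library only; the function name is hypothetical, and the technique offers no guarantee on SSDs, copy-on-write or journaling file systems, or cloud object stores, where old copies and backups can persist. That limitation is why provider-side decommissioning procedures such as the one described for AWS still matter.

```python
import os
import tempfile


def overwrite_and_delete(path: str, passes: int = 1) -> None:
    """Overwrite a file's bytes with random data, flush to disk, then unlink it."""
    size = os.path.getsize(path)
    with open(path, "r+b") as f:
        for _ in range(passes):
            f.seek(0)
            remaining = size
            while remaining > 0:
                chunk = min(remaining, 1 << 20)  # write at most 1 MiB at a time
                f.write(os.urandom(chunk))
                remaining -= chunk
            f.flush()
            os.fsync(f.fileno())  # ask the OS to push the overwrite to the device
    os.remove(path)


fd, path = tempfile.mkstemp()
with os.fdopen(fd, "wb") as f:
    f.write(b"customer record: account 0042")
overwrite_and_delete(path)
print(os.path.exists(path))  # False
```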

Example: Researchers have obtained used drives from online auctions and other sources and retrieved large amounts of data from them; with proper equipment, data can be recovered from failed drives that cloud providers have not properly disposed of [78].

Data storage and lock-in (D11)

Information from articles that deal with data storage, where data is stored, problems with DBMSs, and data lock-in is considered.

Data storage is a concern in CC [54]. Different authors have expressed a number of data storage concerns, as follows:

• Is the data secure? Will data be available when requested? [136][92].

• Authors of [90][5][142][162][17][110] have mentioned data loss or leakage as a challenge, concern or issue in their articles.

• Many doubts arise, such as how data is stored, where it is stored (is it distributed across various places?), what will happen if the cloud provider is taken over, and what security measures are taken to protect the user's data.

• Since a large amount of data is stored in the cloud, it can attract attention from malicious users [166]. As a result, users might not be willing to store or process mission-critical data in CC [55]. Others regard data stored in CC as insecure and unreliable [33][108].

• Customer lock-in might be attractive for service providers, but it is a problem for customers (e.g., vulnerability to price increases, reliability problems, etc.) [13][12][92][110]. For example, customers of ‘Coghead’ were forced to rewrite their applications on another platform when its cloud computing platform was shut down [35].

• Data from different organizations is stored in the same place, which increases the possibility that one organization's data is sold to another organization for money. The authors of [100][133] point out that because all the data is stored in the same place, there are increased chances of data misuse: data can be intentionally leaked, and if this happens, the customer bears the loss [100][133].

• The author of [142] mentions that the data loss/leakage issue affects only public clouds.

Data storage issues in CC can arise when proper sanitization or segregation of data is not implemented, which could leave users unable to extract their data from repositories when necessary or when the company wants to move its data to another location.

Data storage: With cloud computing, users can utilize a wide variety of flexible online storage mechanisms to store their information, known as computing and storage “clouds”. Examples are Amazon S3, Nirvanix CloudNAS, and Microsoft SkyDrive [26]. The architecture of a storage mechanism also depends on the cloud type (internal or external cloud computing) and on the type of service (SaaS, PaaS, or IaaS). This variation exists because in internal cloud computing the organization keeps all data within its own data center, whereas in external cloud computing data is outsourced to the CSP [132]. In any of these cases, the data is not under the user's physical or logical control, and traditional cryptographic primitives cannot be directly adopted by the user [68][95][164]. Since data in CC is stored in remote locations and traditional cryptographic algorithms cannot be directly applied, the security of remotely stored data is a major concern in cloud computing [153].

When accessing flexible storage mechanisms, users can maintain a small trusted local memory, apply a trusted cryptographic mechanism, and then upload data to the cloud; by doing this, the user need not trust the cloud storage provider. To verify data integrity, the user can keep a short hash in local memory and authenticate server responses by recalculating the hash of the received data [26]. To create trust in cloud storage, data storage systems need to fulfill different requirements, such as maintaining users' data, high availability, reliability, performance, replication, and data consistency; because these requirements are interrelated and conflicting, no system implements all of them at once. DaaS providers offer storage as a service by favoring some features over others, as specified by their customers in Service Level Agreements (SLAs) [168]. To assure the security of information, CSPs should protect data not only when it is stored but also when it is in transit [31][123]. In [11], the author mentions that storage devices should support different storage patterns. Various other data storage concerns specific to IaaS are explained in [38]. For better security in cloud storage, long-term storage correctness and remote detection of hardware failures are suggested [126].
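The approach attributed to [26] above keeps only a short hash locally and authenticates each server response by recomputing it. A Merkle (hash) tree is one well-known way to do this per block: the client keeps just the 32-byte root, and the server returns each requested block together with the sibling hashes needed to recompute that root. The sketch below is an illustration under that assumption, not the construction used in [26]; all function names are hypothetical.

```python
import hashlib


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_levels(blocks):
    """Return all tree levels, leaves first; an odd last node is paired with itself."""
    level = [_h(b"\x00" + b) for b in blocks]  # 0x00 prefix marks leaf hashes
    levels = [level]
    while len(level) > 1:
        if len(level) % 2:
            level = level + [level[-1]]
        level = [_h(b"\x01" + level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def prove(levels, index):
    """Server side: sibling hashes from leaf to root for block `index`."""
    proof = []
    for level in levels[:-1]:
        sibling = index ^ 1
        if sibling >= len(level):
            sibling = index  # odd last node was paired with itself
        proof.append((level[sibling], index % 2 == 0))  # (hash, sibling is on the right)
        index //= 2
    return proof


def verify(root, block, proof):
    """Client side: recompute the root from one block and its proof."""
    node = _h(b"\x00" + block)
    for sibling, sibling_on_right in proof:
        pair = node + sibling if sibling_on_right else sibling + node
        node = _h(b"\x01" + pair)
    return node == root


blocks = [b"block-0", b"block-1", b"block-2"]
levels = build_levels(blocks)
root = levels[-1][0]  # the only value the client keeps locally

proof = prove(levels, 2)  # computed by the server
print(verify(root, b"block-2", proof))   # True
print(verify(root, b"tampered", proof))  # False
```

The client's local state stays constant (one hash) however much data is stored, while each proof grows only logarithmically with the number of blocks.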

Table 4.2: Cloud computing challenges related to data

——————————————————————————————————————–

Infocerts, 5B 306 Riverside Greens, Panvel, Raigad 410206 Maharashtra, India

Contact us – https://www.infocerts.com