Searching Logs with Athena

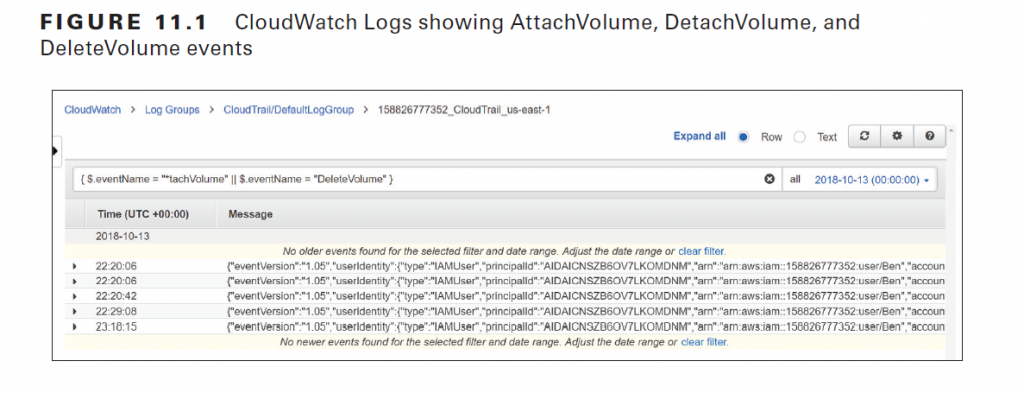

AWS uses S3 to store various logs, including CloudTrail logs, VPC flow logs, DNS query logs, and S3 server access logs. Athena lets you use the Structured Query Language (SQL) to search data stored in S3. Although you can use CloudWatch Logs to store and search logs, you can’t format the output to show you only specific data. For example, suppose you use the following filter pattern to search for all DetachVolume, AttachVolume, and DeleteVolume events in a CloudWatch log stream containing CloudTrail logs:

{ $.eventName = "*tachVolume" || $.eventName = "DeleteVolume" }

CloudWatch Logs will display each matching event in its native JSON format, which as you can see in Figure 11.1 is inherently difficult to read.

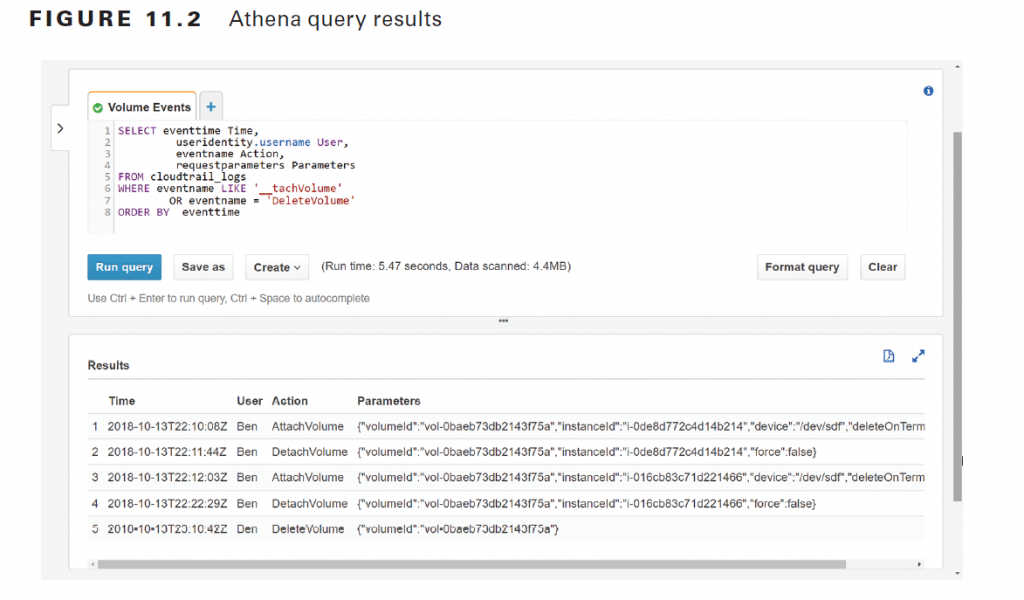
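To make the filter pattern concrete, here is a minimal local sketch in Python of what it selects. It does not call CloudWatch Logs: the sample events and the `matches_filter` helper are hypothetical, and `fnmatchcase` stands in for the wildcard matching in the pattern.

```python
import json
from fnmatch import fnmatchcase

# Hypothetical, heavily trimmed CloudTrail events; real events carry many more fields.
SAMPLE_EVENTS = [
    '{"eventName": "AttachVolume", "eventTime": "2019-01-05T10:00:00Z"}',
    '{"eventName": "DetachVolume", "eventTime": "2019-01-05T11:00:00Z"}',
    '{"eventName": "DeleteVolume", "eventTime": "2019-01-05T12:00:00Z"}',
    '{"eventName": "RunInstances", "eventTime": "2019-01-05T13:00:00Z"}',
]


def matches_filter(raw_event: str) -> bool:
    """Mimic { $.eventName = "*tachVolume" || $.eventName = "DeleteVolume" }."""
    name = json.loads(raw_event).get("eventName", "")
    return fnmatchcase(name, "*tachVolume") or name == "DeleteVolume"


matched = [json.loads(e)["eventName"] for e in SAMPLE_EVENTS if matches_filter(e)]
print(matched)  # ['AttachVolume', 'DetachVolume', 'DeleteVolume']
```

Even in this trimmed form, each match is a whole JSON document, which is the readability problem the text describes.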

Additionally, you may not be interested in every property in the JSON document and want to see only a few key elements. Amazon Athena makes it easy to achieve this. Once you bring data into Athena from S3, you can query it using SQL, sort it, and have Athena display only specific columns, as shown in Figure 11.2.

Because Athena uses SQL, you must define the structure of the data by using a CREATE TABLE Data Definition Language (DDL) statement. The DDL statement maps the values in the source file to columns in a table. AWS provides DDL statements for creating tables to store Application Load Balancer logs, CloudTrail logs, CloudFront logs, and VPC flow logs.
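The idea of defining a table and then selecting only a few columns can be tried locally. The sketch below uses Python's built-in `sqlite3` module purely as a stand-in for Athena. The table name `cloudtrail_logs` and its four columns are simplified assumptions, and Athena's own DDL and SQL dialect differ from SQLite's.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Simplified, hypothetical table; the AWS-provided CloudTrail DDL defines many more columns.
conn.execute(
    "CREATE TABLE cloudtrail_logs ("
    "eventtime TEXT, eventname TEXT, username TEXT, volumeid TEXT)"
)
conn.executemany(
    "INSERT INTO cloudtrail_logs VALUES (?, ?, ?, ?)",
    [
        ("2019-01-05T12:00:00Z", "DeleteVolume", "alice", "vol-0aaa"),
        ("2019-01-05T10:00:00Z", "AttachVolume", "bob", "vol-0aaa"),
        ("2019-01-05T13:00:00Z", "RunInstances", "bob", None),
        ("2019-01-05T11:00:00Z", "DetachVolume", "bob", "vol-0aaa"),
    ],
)

# Return only three columns, restricted to the volume events and sorted by time.
QUERY = """
SELECT eventtime, eventname, username
FROM cloudtrail_logs
WHERE eventname LIKE '%tachVolume' OR eventname = 'DeleteVolume'
ORDER BY eventtime
"""
rows = conn.execute(QUERY).fetchall()
for row in rows:
    print(row)
```

The result is three short rows in chronological order instead of three full JSON documents.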

You can import multiple logs into a single Athena database and then use SQL JOIN statements to correlate data across those logs. The data formats that Athena supports include the following:

■ Comma-separated values (CSV) and tab-separated values (TSV)

■ JavaScript Object Notation (JSON)—including CloudTrail logs, VPC flow logs, and DNS query logs

■ Apache ORC and Parquet—storage formats for Apache Hadoop, which is a framework for processing large data sets
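As a rough illustration of correlating two logs with a JOIN, the following sketch again uses `sqlite3` as a local stand-in for Athena. Both tables, their column names, and the sample rows are hypothetical simplifications; the IP addresses come from documentation-reserved ranges.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE cloudtrail_logs (eventname TEXT, sourceipaddress TEXT);
CREATE TABLE vpc_flow_logs (srcaddr TEXT, dstport INTEGER, action TEXT);
INSERT INTO cloudtrail_logs VALUES
    ('DescribeInstances', '198.51.100.7'),
    ('ListBuckets', '203.0.113.9');
INSERT INTO vpc_flow_logs VALUES
    ('198.51.100.7', 22, 'REJECT'),
    ('198.51.100.7', 443, 'ACCEPT'),
    ('192.0.2.44', 80, 'ACCEPT');
""")

# Find addresses that both called an API and sent traffic into the VPC.
JOIN_QUERY = """
SELECT c.sourceipaddress, c.eventname, f.dstport, f.action
FROM cloudtrail_logs AS c
JOIN vpc_flow_logs AS f ON f.srcaddr = c.sourceipaddress
ORDER BY f.dstport
"""
correlated = conn.execute(JOIN_QUERY).fetchall()
for row in correlated:
    print(row)
```

Only the address that appears in both logs survives the join, which is the kind of cross-log question that is awkward to answer from either log alone.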

Auditing Resource Configurations with AWS Config

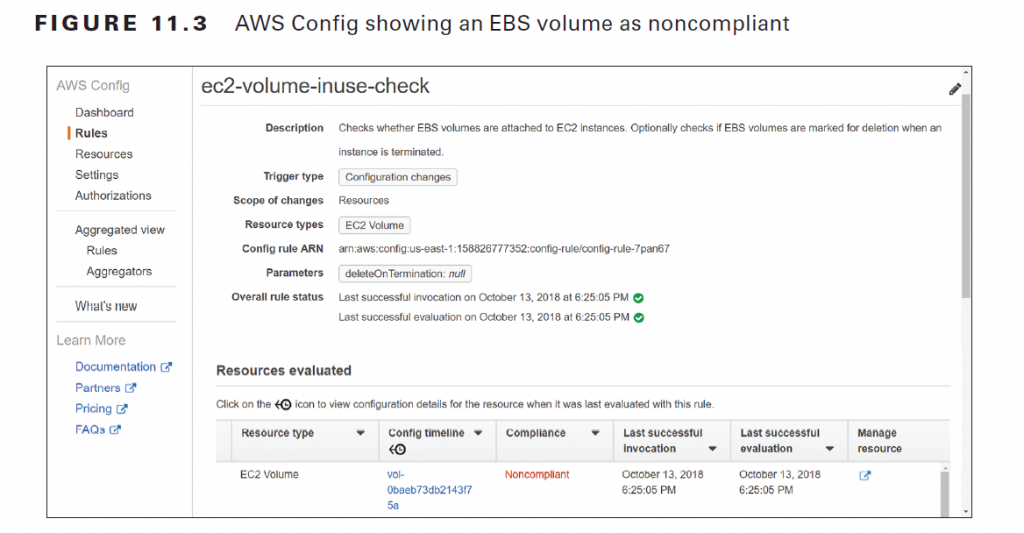

In addition to monitoring events, your overall security strategy should include monitoring the configuration state of your AWS resources. AWS Config can alert you when a resource configuration in your AWS account changes. It can also compare your resource configurations against a baseline and alert you when the configuration deviates from it, which is useful for validating that you’re in compliance with organizational and regulatory requirements.

To compare your resource configurations against a desired baseline, you can implement AWS Config rules. In a busy AWS environment where configuration changes are occurring frequently, it’s important to determine which types of changes require further investigation. AWS Config rules let you define configuration states that are abnormal or suspicious so that you can focus on analyzing and, if necessary, remediating them.

AWS offers a variety of managed rules to cover such scenarios. For example, the ec2-volume-inuse-check rule looks for EBS volumes that aren’t attached to any instance. With this rule in place, if you create an EBS volume and don’t attach it to an instance, AWS Config will report the volume as noncompliant, as shown in Figure 11.3.
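The logic behind a rule such as ec2-volume-inuse-check can be sketched in a few lines of Python. This is not the managed rule's actual implementation. The `evaluate_volume` helper and the volume records are hypothetical, loosely shaped like the output of the EC2 DescribeVolumes API.

```python
from typing import Dict, List


def evaluate_volume(volume: Dict) -> str:
    """Return a compliance label: a volume with no attachments is noncompliant."""
    return "COMPLIANT" if volume.get("Attachments") else "NON_COMPLIANT"


# Hypothetical volume records for illustration only.
volumes: List[Dict] = [
    {"VolumeId": "vol-0aaa", "Attachments": [{"InstanceId": "i-0123"}]},
    {"VolumeId": "vol-0bbb", "Attachments": []},
]

report = {v["VolumeId"]: evaluate_volume(v) for v in volumes}
print(report)
```

The rule is a predicate over the current configuration of one resource, so it says nothing about the resource's past states.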

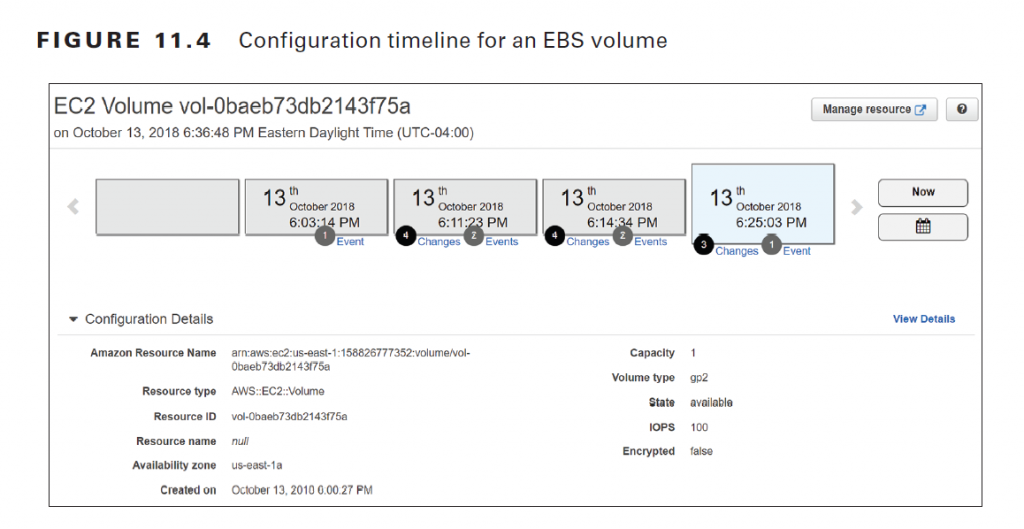

Note that the rule only gives the current compliance status of the resource. Suppose that an IT auditor needed to see which EC2 instances this EBS volume was previously attached to. Although you could derive this information from CloudTrail logs, it would be difficult and time-consuming. You’d have to carefully sift through the CloudTrail logs, paying attention to every AttachVolume and DetachVolume operation. Imagine how much more cumbersome this process would be if you had to do this for hundreds of instances! Instead, you could simply use AWS Config to view the configuration timeline for the resource, as shown in Figure 11.4.

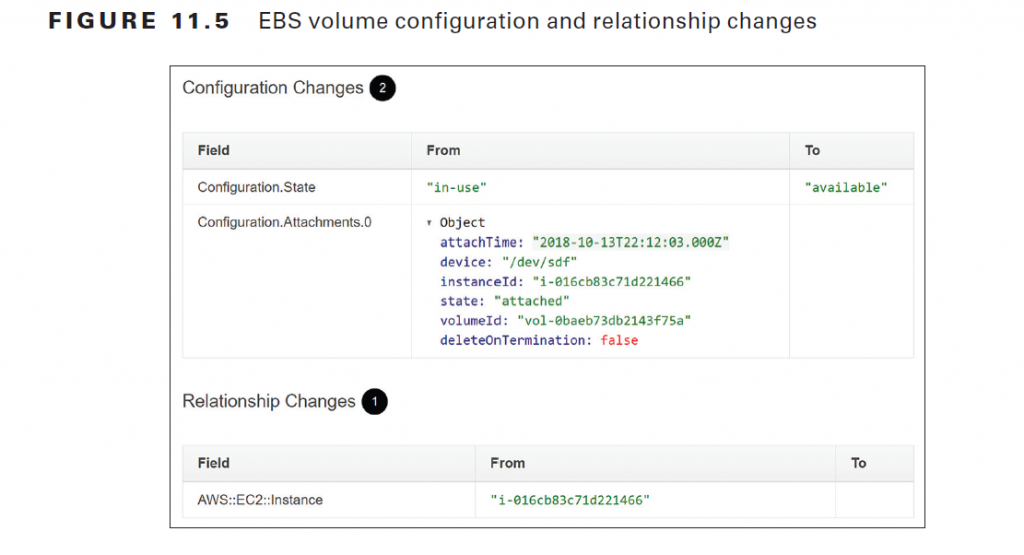

The configuration timeline shows a timestamp for each time the EBS volume was modified. You can click any box in the timeline to view the specific API events that triggered the configuration change, the before-and-after configuration details, and any relationship changes. The latest configuration and relationship changes, shown in Figure 11.5, reveal that the volume was attached to an instance and was then subsequently detached. Note that the act of detaching the volume from an instance placed the volume out of compliance with the ec2-volume-inuse-check rule.
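Conceptually, a configuration timeline is a series of snapshots of one resource, and the relationship changes are the differences between consecutive snapshots. The sketch below illustrates that idea with hypothetical, heavily simplified configuration items. The field names `time` and `attached_to` are assumptions, not the format AWS Config records.

```python
from typing import Dict, List, Optional

# Hypothetical configuration items for one EBS volume, oldest first.
timeline: List[Dict] = [
    {"time": "2019-01-05T09:00:00Z", "attached_to": None},
    {"time": "2019-01-05T10:00:00Z", "attached_to": "i-0123"},
    {"time": "2019-01-05T11:00:00Z", "attached_to": None},
]


def relationship_changes(items: List[Dict]) -> List[str]:
    """Compare consecutive configuration items and describe attachment changes."""
    changes = []
    for before, after in zip(items, items[1:]):
        old: Optional[str] = before["attached_to"]
        new: Optional[str] = after["attached_to"]
        if old == new:
            continue
        if old is not None:
            changes.append(f'{after["time"]} detached from {old}')
        if new is not None:
            changes.append(f'{after["time"]} attached to {new}')
    return changes


history = relationship_changes(timeline)
print("\n".join(history))
```

This is the question the auditor was asking: which instances the volume was attached to, and when, without replaying every AttachVolume and DetachVolume event by hand.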

Amazon GuardDuty

Amazon GuardDuty analyzes VPC flow logs, CloudTrail management event logs, and Route 53 DNS query logs, looking for known malicious IP addresses, domain names, and potentially malicious activity. You do not need to stream any logs to CloudWatch Logs for GuardDuty to be able to analyze them. When GuardDuty detects a potential security problem, it creates a finding, which is a notification that details the questionable activity. GuardDuty displays the finding in the GuardDuty console and also delivers the finding to CloudWatch Events. You can configure an SNS notification to send an alert or take an action in response to such an event. Findings are classified according to the ostensible purpose of the threat, which can be one of the following finding types:

Backdoor This indicates an EC2 instance has been compromised by malware that can be used to send spam or participate in distributed denial-of-service (DDoS) attacks. This finding may be triggered when the instance communicates on TCP port 25 or when it resolves the domain name of a known command-and-control server used to coordinate DDoS attacks.

Behavior This indicates an EC2 instance is communicating on a protocol and port that it normally doesn’t or is sending an abnormally large amount of traffic to an external host.

Cryptocurrency An EC2 instance is exhibiting network activity indicating that it’s operating as a Bitcoin node. It may be sending, receiving, or mining Bitcoin.

Pentest A system running Kali Linux, a popular Linux distribution used for penetration testing, is making API calls against your AWS resources.

Persistence An IAM user with no prior history of doing so has modified user or resource permissions, security groups, routes, or network access control lists.

Recon This indicates a reconnaissance attack may be underway. Behavior that can trigger this finding may include a host from a known malicious IP address probing an EC2 instance on a port that’s not blocked by a security group or network access control list. Reconnaissance behavior can also include a malicious IP attempting to invoke an API call against a resource in your AWS account. Another trigger is an IAM user with no history of doing so attempting to enumerate security groups, network access control lists, routes, AWS resources, and IAM user permissions.

ResourceConsumption This indicates that an IAM user has launched an EC2 instance, despite having no history of doing so.

Stealth A password policy was weakened, CloudTrail logging was disabled or modified, or CloudTrail logs were deleted.

Trojan An EC2 instance is exhibiting behavior that indicates a Trojan may be installed. A Trojan is a malicious program that can send data from your AWS environment to an attacker or provide a way for an attacker to collect or store stolen data on your instance. UnauthorizedAccess Indicates a possible unauthorized attempt to access your AWS resources via an API call or console login. This finding type may also indicate someone attempting to brute force a secure shell (SSH) or Remote Desktop Protocol (RDP) session. Notice that every finding type relates to either the inappropriate use of AWS credentials

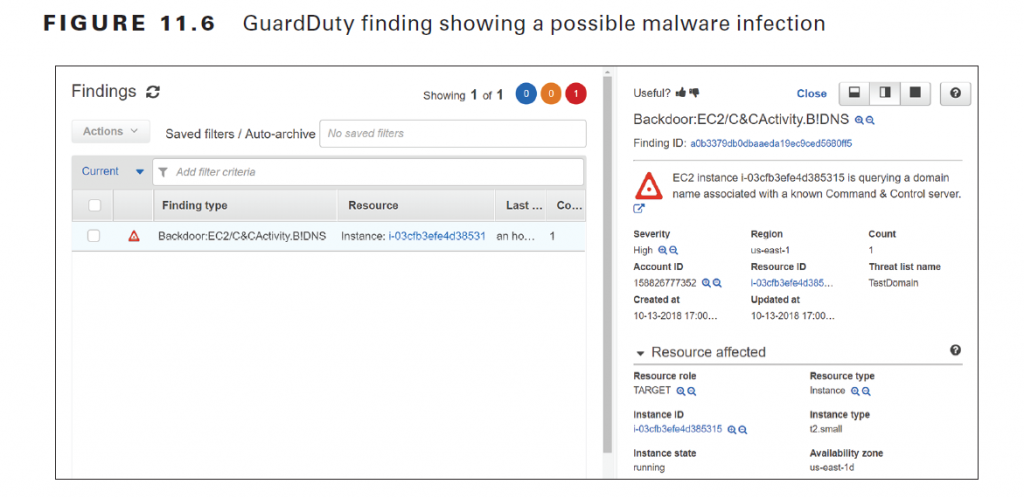

or the presence of malware on an EC2 instance. For example, in Figure 11.6, GuardDuty has detected network activity indicative of malware attempting to communicate with a command-and-control server, which is a server run by an attacker used to coordinate various types of attacks.

Remediating this finding might involve identifying and removing the malware or simply terminating the instance and creating a new one.

In the case of the potentially inappropriate use of AWS credentials, remediation would include first contacting the authorized owner of the credentials to find out whether the activity was legitimate. If the credentials were used by an unauthorized party, then you would immediately revoke the compromised credentials and issue new ones.
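The split between credential-related and instance-related findings can be turned into a simple triage step. The sketch below assumes finding type strings of the form `ThreatPurpose:ResourceType/...`. The two sample strings are illustrative, and the helper names and response texts are hypothetical.

```python
from typing import Tuple


def parse_finding_type(finding_type: str) -> Tuple[str, str]:
    """Split 'Purpose:Resource/Family...' into (threat purpose, resource type)."""
    purpose, _, rest = finding_type.partition(":")
    resource, _, _family = rest.partition("/")
    return purpose, resource


def first_response(finding_type: str) -> str:
    """Map a finding to one of the two remediation tracks described in the text."""
    _purpose, resource = parse_finding_type(finding_type)
    if resource == "IAMUser":
        return "contact the credential owner; revoke and reissue if unauthorized"
    if resource == "EC2":
        return "remove the malware or replace the instance"
    return "review manually"


for finding in (
    "Backdoor:EC2/C&CActivity.B!DNS",
    "Stealth:IAMUser/CloudTrailLoggingDisabled",
):
    print(finding, "->", first_response(finding))
```

In practice a function like this might run in response to the CloudWatch Events notification, but wiring that up is outside the scope of this sketch.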

Amazon Inspector

Amazon Inspector is an agent-based service that looks for vulnerabilities on your EC2 instances. Whereas GuardDuty looks for security threats by inspecting network traffic to and from your instances, the Inspector agent runs an assessment on the instance and analyzes its network, filesystem, and process activity. Inspector determines whether any threats or vulnerabilities exist by comparing the collected data against one or more rules packages. Inspector offers five rules packages:

Common Vulnerabilities and Exposures Common Vulnerabilities and Exposures (CVEs) are common vulnerabilities found in publicly released software, which includes both commercial and open source software for both Linux and Windows.

Center for Internet Security Benchmarks These include security best practices for Linux and Windows operating system configurations.

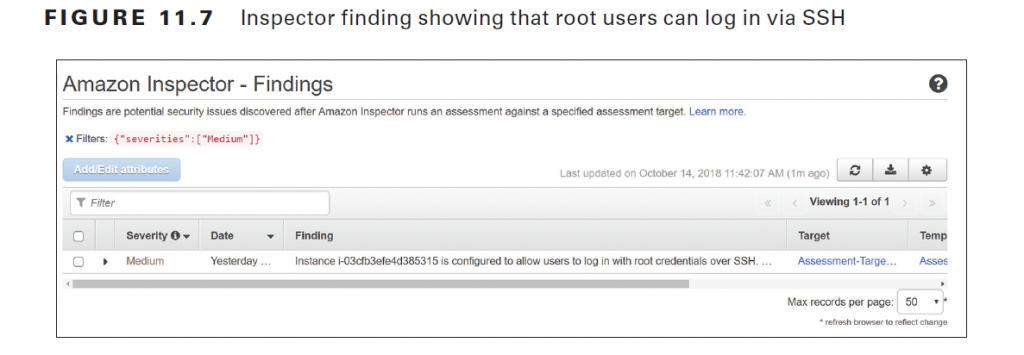

Security Best Practices These rules are a subset of the Center for Internet Security Benchmarks, providing a handful of rules against Linux instances only. Issues that these rules look for include root access via SSH, lack of a secure password policy, and insecure permissions on system directories.

Runtime Behavior Analysis This detects the use of insecure client and server protocols, unused listening TCP ports, and, on Linux systems, inappropriate file permissions and ownership.

Network Reachability These rules detect network configurations that make resources in your VPC vulnerable. Some examples include having an instance in a public subnet or running an application listening on a well-known port.

After an assessment runs, Inspector generates a list of findings classified by the following severity levels:

■ High—The issue should be resolved immediately.

■ Medium—The issue should be resolved at the next possible opportunity.

■ Low—The issue should be resolved at your convenience.

■ Informational—This indicates a security configuration detail that isn’t likely to result in your system being compromised. Nevertheless, you may want to address it depending on your organization’s requirements.

Note that the high, medium, and low severity levels indicate an issue is likely to result in a compromise of the confidentiality, integrity, or availability of information. Figure 11.7 shows a finding with a severity level of medium.
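Since the severity levels imply an order of work, a list of findings can be sorted into a triage queue. The sketch below is a minimal illustration. The finding titles and the severities attached to them are hypothetical and do not reflect how Inspector rates any particular issue.

```python
from typing import Dict, List

# Lower number means more urgent, following the order given in the text.
SEVERITY_ORDER = {"High": 0, "Medium": 1, "Low": 2, "Informational": 3}

# Hypothetical findings for illustration only.
findings: List[Dict[str, str]] = [
    {"title": "Unused listening TCP port", "severity": "Low"},
    {"title": "Root login over SSH permitted", "severity": "High"},
    {"title": "Configuration detail noted", "severity": "Informational"},
    {"title": "No password policy configured", "severity": "Medium"},
]


def triage(items: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Return findings ordered from most to least urgent."""
    return sorted(items, key=lambda f: SEVERITY_ORDER[f["severity"]])


queue = triage(findings)
for finding in queue:
    print(f'{finding["severity"]:<13} {finding["title"]}')
```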


Once you resolve any outstanding security vulnerabilities on an instance, you can create a new AMI from that instance and use it going forward when provisioning new instances.

Protecting Network Boundaries

The network can be the first line of defense against attacks. All AWS services depend on the network, so when designing your AWS infrastructure, you should consider how your AWS resources will need to communicate with one another, with external resources, and with users. Many AWS services have public endpoints that are accessible via the Internet. It’s the responsibility of AWS to manage the network security of these endpoints and protect them from attacks. However, you can control network access to and from the resources in your VPCs, such as EC2 instances, RDS instances, and elastic load balancers.

Network Access Control Lists and Security Groups

Each resource within a VPC must reside within a subnet. Network access control lists define what traffic is allowed to and from a subnet. Security groups provide granular control of traffic to and from individual resources, such as instances and elastic load balancer listeners. You should configure your security groups and network access control lists to allow traffic to and from your AWS resources using only the protocols and ports required. If your security requirements call for it, you may also need to restrict communication to specific IP address ranges. Consider which of your resources need to be accessible from the Internet. A VPC must have an Internet gateway for resources in the VPC to access the Internet. Also, each route table attached to each subnet must have a default route with the Internet gateway as a target. If you’re running a multitier application with instances that don’t need Internet access—such as database servers—consider placing those in a separate subnet that permits traffic to and from the specific resources that the instance needs to communicate with. As an added precaution, ensure the route table the subnet is associated with doesn’t have a default route.
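Two of the checks described above can be sketched as plain Python over hypothetical rule and route data: flagging inbound rules that open a non-web port to every address, and confirming that a private subnet's route table has no default route. The dictionary layout and the set of ports treated as acceptable are assumptions for illustration, not an AWS data format.

```python
import ipaddress
from typing import Dict, List

# Assumption: only web traffic may be open to the whole Internet.
ALLOWED_PUBLIC_PORTS = {80, 443}


def overly_open_rules(rules: List[Dict]) -> List[Dict]:
    """Return inbound rules that expose a non-approved port to any IPv4 address."""
    flagged = []
    for rule in rules:
        network = ipaddress.ip_network(rule["cidr"])
        if network.prefixlen == 0 and rule["port"] not in ALLOWED_PUBLIC_PORTS:
            flagged.append(rule)
    return flagged


def has_default_route(routes: List[Dict]) -> bool:
    """True if the route table sends 0.0.0.0/0 to any target."""
    return any(r["destination"] == "0.0.0.0/0" for r in routes)


inbound = [
    {"protocol": "tcp", "port": 443, "cidr": "0.0.0.0/0"},
    {"protocol": "tcp", "port": 22, "cidr": "0.0.0.0/0"},
    {"protocol": "tcp", "port": 3306, "cidr": "10.0.1.0/24"},
]
db_subnet_routes = [{"destination": "10.0.0.0/16", "target": "local"}]

print(overly_open_rules(inbound))
print(has_default_route(db_subnet_routes))
```

Here the SSH rule is flagged because it is open to all addresses, while the database rule passes because it is limited to one internal range.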

AWS Web Application Firewall

The Web Application Firewall (WAF) monitors HTTP and HTTPS requests to an Application Load Balancer or CloudFront distribution. WAF protects your applications from common exploits that could result in a denial of service or allow unauthorized access to your application. Unlike network access control lists and security groups, which allow or deny access based solely on a source IP address or port and protocol, WAF lets you inspect application traffic for signs of malicious activity, including injection of malicious scripts used in cross-site scripting attacks, SQL statements used in SQL injection attacks, and abnormally long query strings. You can block these requests so that they never reach your application. WAF can also block traffic based on source IP address patterns or geographic location. You can create a Lambda function to check a list of known malicious IP addresses and add them to a WAF block list. You can also create a Lambda function to analyze web server logs and identify IP addresses that generate bad or excessive requests indicative of an HTTP flood attack and add those addresses to a block list.
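The log-analysis half of such a Lambda function might look something like the following sketch. It only counts requests per client address and reports those above a threshold. The log format, the threshold, and the `flood_suspects` helper are assumptions, and the step that would add the addresses to a WAF block list is deliberately left out.

```python
from collections import Counter
from typing import Iterable, List


def flood_suspects(log_lines: Iterable[str], threshold: int) -> List[str]:
    """Return client IPs that appear in more than `threshold` log lines."""
    counts = Counter(line.split()[0] for line in log_lines if line.strip())
    return sorted(ip for ip, n in counts.items() if n > threshold)


# Hypothetical access log where the client IP is the first field on each line.
log = ['203.0.113.9 - - "GET / HTTP/1.1" 200'] * 5 + [
    '198.51.100.7 - - "GET /about HTTP/1.1" 200',
    '198.51.100.7 - - "POST /login HTTP/1.1" 401',
]

block_list = flood_suspects(log, threshold=3)
print(block_list)  # ['203.0.113.9']
```

A real implementation would also need a time window, since five requests per second and five requests per day mean very different things.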

AWS Shield

Internet-facing applications running on AWS are of necessity vulnerable to DDoS attacks. AWS Shield is a service that helps protect your applications from such an attack. AWS Shield comes in two flavors.

AWS Shield Standard Defends against common layer 3 and 4 DDoS attacks such as SYN flood and UDP reflection attacks. Shield Standard is automatically activated for all AWS customers.

AWS Shield Advanced Provides the same protection as Shield Standard but also includes protection against layer 7 attacks, such as HTTP flood attacks that overwhelm an application with HTTP GET or POST requests. To obtain layer 7 protection for an EC2 instance, it must have an elastic IP address. You also get attack notifications, forensic reports, and 24/7 assistance from the AWS DDoS response team. AWS WAF is included at no charge. Shield mitigates 99 percent of attacks in five minutes or less. It mitigates attacks against CloudFront and Route 53 in less than one second and Elastic Load Balancing in less than five minutes. Shield usually mitigates all other attacks in less than 20 minutes.

Data Encryption

Encrypting data ensures the confidentiality of data by preventing those without the correct key from decrypting and reading it. As a side effect, encryption also makes it infeasible for someone to modify the original data once it’s been encrypted. Data can exist in one of two states: at rest, sitting on a storage medium such as an EBS volume or S3 bucket, and in transit across the network. How you encrypt data differs according to its state.

Data at Rest

How you encrypt data at rest depends on where it’s stored. On AWS, the bulk of your data will probably reside in S3, Elastic Block Store (EBS) volumes, an Elastic File System (EFS) filesystem, or a Relational Database Service (RDS) database. Each of these services integrates with KMS and gives you the option of using your own customer-managed customer master key (CMK) or an AWS-managed customer master key. When you use your own CMK, you can configure key policies to control who may use the key to encrypt and decrypt data. You can also rotate, disable, and revoke keys. Using a customer-managed CMK gives you maximum control over your data. An AWS-managed CMK automatically rotates once a year. You can’t disable, rotate, or revoke it. You can view existing CMKs and create new ones by browsing to the Encryption Keys link in the IAM Dashboard. Most AWS services offer encryption of data at rest using KMS-managed keys, although a few, including DynamoDB, support only AWS-managed keys. Note that enabling encryption for some services, such as CloudWatch Logs, requires using the AWS CLI.

S3

If your data is stored in an S3 bucket, you have a number of encryption options.

■ Server-side encryption with S3-managed keys (SSE-S3)

■ Server-side encryption with KMS-managed keys (SSE-KMS)

■ Server-side encryption with customer-provided keys (SSE-C)

■ Client-side encryption

Remember that encryption applies per object, so it’s possible to have a bucket containing objects using different encryption options or no encryption at all. Applying default encryption at the bucket level does not automatically encrypt existing objects in the bucket but only those created moving forward.
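Because encryption is a per-object property, auditing a bucket means looking at each object. The sketch below works on hypothetical object metadata rather than a live bucket. It assumes each record carries a `ServerSideEncryption` field when the object is encrypted server-side (as S3 reports for such objects) and omits it otherwise.

```python
from typing import Dict, List

# Hypothetical per-object metadata for one bucket.
objects: List[Dict[str, str]] = [
    {"Key": "logs/2019-01-04.gz"},
    {"Key": "logs/2019-01-05.gz", "ServerSideEncryption": "AES256"},
    {"Key": "reports/q1.csv", "ServerSideEncryption": "aws:kms"},
]


def unencrypted_keys(items: List[Dict[str, str]]) -> List[str]:
    """Return the keys of objects with no server-side encryption recorded."""
    return [o["Key"] for o in items if "ServerSideEncryption" not in o]


def encryption_summary(items: List[Dict[str, str]]) -> Dict[str, int]:
    """Count objects per encryption mode, using 'none' for unencrypted objects."""
    summary: Dict[str, int] = {}
    for o in items:
        mode = o.get("ServerSideEncryption", "none")
        summary[mode] = summary.get(mode, 0) + 1
    return summary


print(unencrypted_keys(objects))
print(encryption_summary(objects))
```

One bucket here holds three objects in three different states, which is the mixed situation that can remain after default encryption is turned on for a bucket that already has content.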

Elastic Block Store

You can encrypt an EBS volume using a KMS-managed key, and you can do so when you initially create the volume. However, you cannot directly encrypt a volume created from an unencrypted snapshot or unencrypted AMI. You must first create a snapshot of the unencrypted volume and then copy that snapshot with encryption enabled, which produces an encrypted snapshot from which you can create a new, encrypted volume. When you copy a snapshot, you also have the option of choosing the destination region. The key you use to encrypt the destination snapshot must exist in the destination region.
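One way to read the sequence for an existing unencrypted volume is: take a snapshot, make an encrypted copy of it, and create a new volume from the copy. The sketch below expresses that order using the method names of the boto3 EC2 client, but runs them against `FakeEC2`, a hypothetical offline stand-in that only records calls. The volume ID, key alias, and returned IDs are made up.

```python
class FakeWaiter:
    """Records a wait call instead of polling AWS."""

    def __init__(self, name, calls):
        self.name, self.calls = name, calls

    def wait(self, **kwargs):
        self.calls.append((f"wait:{self.name}", kwargs))


class FakeEC2:
    """Offline stand-in mimicking only the EC2 client methods used below."""

    def __init__(self):
        self.calls = []

    def create_snapshot(self, **kwargs):
        self.calls.append(("create_snapshot", kwargs))
        return {"SnapshotId": "snap-plain"}

    def copy_snapshot(self, **kwargs):
        self.calls.append(("copy_snapshot", kwargs))
        return {"SnapshotId": "snap-encrypted"}

    def create_volume(self, **kwargs):
        self.calls.append(("create_volume", kwargs))
        return {"VolumeId": "vol-encrypted"}

    def get_waiter(self, name):
        return FakeWaiter(name, self.calls)


def encrypt_volume(ec2, volume_id, region, zone, kms_key_id):
    """Snapshot a volume, copy the snapshot with encryption, and restore the copy."""
    snap = ec2.create_snapshot(VolumeId=volume_id)
    ec2.get_waiter("snapshot_completed").wait(SnapshotIds=[snap["SnapshotId"]])
    copy = ec2.copy_snapshot(
        SourceSnapshotId=snap["SnapshotId"],
        SourceRegion=region,
        Encrypted=True,
        KmsKeyId=kms_key_id,  # must exist in the region where the copy is made
    )
    ec2.get_waiter("snapshot_completed").wait(SnapshotIds=[copy["SnapshotId"]])
    vol = ec2.create_volume(SnapshotId=copy["SnapshotId"], AvailabilityZone=zone)
    return vol["VolumeId"]


client = FakeEC2()
new_volume = encrypt_volume(
    client, "vol-0aaa", "us-east-1", "us-east-1a", "alias/example-key"
)
print(new_volume, [name for name, _ in client.calls])
```

With real credentials, passing `boto3.client("ec2")` in place of `FakeEC2()` should follow the same path, though error handling and cleanup of the intermediate snapshot are omitted here.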

Elastic File System

You can enable encryption for an EFS filesystem when you create it. EFS encryption uses KMS customer master keys to encrypt files, and an EFS-managed key to encrypt filesystem metadata, such as file and directory names.

Data in Transit

Encrypting data in transit is enabled through the use of a Transport Layer Security (TLS) certificate. You can use AWS Certificate Manager (ACM) to generate a TLS certificate and then install it on an Application Load Balancer or a CloudFront distribution. Refer to “The Domain Name System and Network Routing: Amazon Route 53 and Amazon CloudFront,” for instructions on creating a TLS encryption certificate and installing it on a CloudFront distribution.

Keep in mind that you cannot export the private key for a TLS certificate generated by ACM. This means you can’t install the certificate directly on an EC2 instance or on-premises server. You can, however, import an existing TLS certificate and private key into ACM. The certificate must be valid and not expired when you import it. To use an imported certificate with CloudFront, you must import it into the us-east-1 region.
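The import preconditions above lend themselves to a quick check before you attempt the import. The following sketch is a hypothetical helper, not part of ACM. It assumes you have already read the certificate's validity dates by some other means, and it only tests the two conditions named in the text.

```python
from datetime import datetime, timezone
from typing import List, Optional


def import_problems(
    not_before: datetime,
    not_after: datetime,
    region: str,
    now: Optional[datetime] = None,
) -> List[str]:
    """Return reasons an imported certificate would be unusable with CloudFront."""
    now = now or datetime.now(timezone.utc)
    problems = []
    if now < not_before:
        problems.append("certificate is not yet valid")
    if now > not_after:
        problems.append("certificate has expired")
    if region != "us-east-1":
        problems.append("CloudFront requires the certificate in us-east-1")
    return problems


# Hypothetical certificate validity window and a fixed "current" time.
start = datetime(2019, 1, 1, tzinfo=timezone.utc)
end = datetime(2020, 1, 1, tzinfo=timezone.utc)
today = datetime(2019, 6, 1, tzinfo=timezone.utc)

print(import_problems(start, end, "us-east-1", now=today))
print(import_problems(start, end, "eu-west-1", now=today))
```

An empty list means both conditions hold; anything else names what to fix first.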

