Cloud Computing and Virtualization

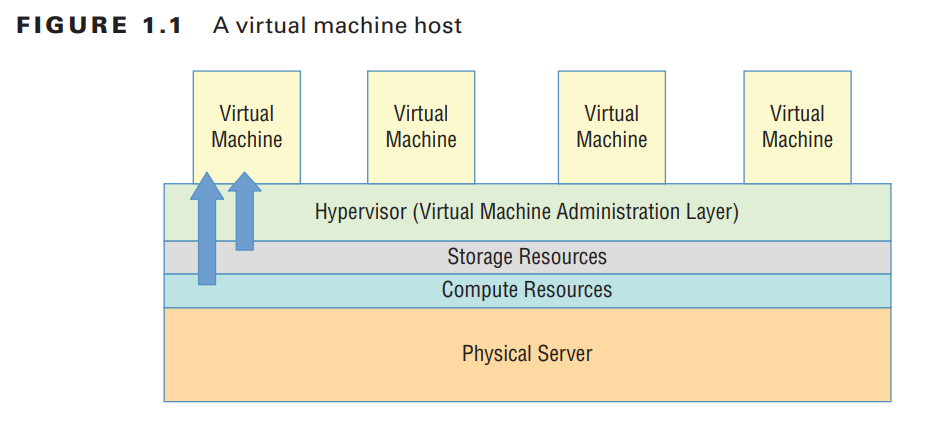

The technology that lies at the core of all cloud operations is virtualization. As illustrated in Figure 1.1, virtualization lets you divide the hardware resources of a single physical server into smaller units. That physical server could therefore host multiple virtual machines running their own complete operating systems, each with its own memory, storage, and network access.
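To make the idea of dividing one server into smaller units more concrete, here is a minimal Python sketch. It is a toy model, not how a real hypervisor works: the `PhysicalHost` and `VirtualMachine` classes and the capacity figures are hypothetical names and numbers chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class VirtualMachine:
    name: str
    vcpus: int
    memory_gib: int


@dataclass
class PhysicalHost:
    """A single physical server whose resources are carved into VMs."""
    total_vcpus: int
    total_memory_gib: int
    vms: list = field(default_factory=list)

    def free_vcpus(self) -> int:
        return self.total_vcpus - sum(vm.vcpus for vm in self.vms)

    def free_memory_gib(self) -> int:
        return self.total_memory_gib - sum(vm.memory_gib for vm in self.vms)

    def provision(self, vm: VirtualMachine) -> bool:
        """Place the VM on this host only if enough capacity remains."""
        if vm.vcpus > self.free_vcpus() or vm.memory_gib > self.free_memory_gib():
            return False
        self.vms.append(vm)
        return True

    def shut_down(self, name: str) -> None:
        """Remove a VM; its resources become available to other workloads."""
        self.vms = [vm for vm in self.vms if vm.name != name]


# Hypothetical capacities, for illustration only.
host = PhysicalHost(total_vcpus=16, total_memory_gib=64)
host.provision(VirtualMachine("web", vcpus=4, memory_gib=16))
host.provision(VirtualMachine("db", vcpus=8, memory_gib=32))
print(host.free_vcpus(), host.free_memory_gib())  # 4 16
host.shut_down("web")
print(host.free_vcpus(), host.free_memory_gib())  # 8 32
```

Each virtual machine claims a slice of the host's capacity, and shutting one down returns that slice to the pool.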

Virtualization’s flexibility makes it possible to provision a virtual server in a matter of seconds, run it for exactly the time your project requires, and then shut it down. The resources released will become instantly available to other workloads. The usage density you can achieve lets you squeeze the greatest value from your hardware and makes it easy to generate experimental and sandboxed environments.

Cloud Computing Architecture

Major cloud providers like AWS have enormous server farms where hundreds of thousands of servers and data drives are maintained along with the network cabling necessary to connect them. A well-built virtualized environment could provide a virtual server using storage, memory, compute cycles, and network bandwidth collected from the most efficient mix of available sources it can find. A cloud computing platform offers on-demand, self-service access to pooled compute resources where your usage is metered and billed according to the volume you consume. Cloud computing systems allow for precise billing models, sometimes involving fractions of a penny for an hour of consumption.
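As a rough illustration of metered billing, the sketch below prorates an hourly rate over the time a resource actually ran. The rate is a made-up figure and per-second proration is an assumption for the example; actual AWS rates and billing granularity vary by service.

```python
from decimal import Decimal


def metered_cost(hourly_rate_usd: Decimal, seconds_used: int) -> Decimal:
    """Charge only for the time consumed, prorated from an hourly rate."""
    hours = Decimal(seconds_used) / Decimal(3600)
    return hourly_rate_usd * hours


# Hypothetical rate: a little over half a cent per hour.
rate = Decimal("0.0058")
cost = metered_cost(rate, seconds_used=90 * 60)  # 90 minutes of usage
print(f"${cost:.4f}")  # $0.0087
```

Using `Decimal` rather than floating-point numbers keeps sub-cent amounts exact, which matters when charges are fractions of a penny.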

Cloud Computing Optimization

The cloud is a great choice for so many serious workloads because it’s scalable, elastic, and, often, a lot cheaper than traditional alternatives. Effective deployment provisioning will require some insight into those three features.

Scalability

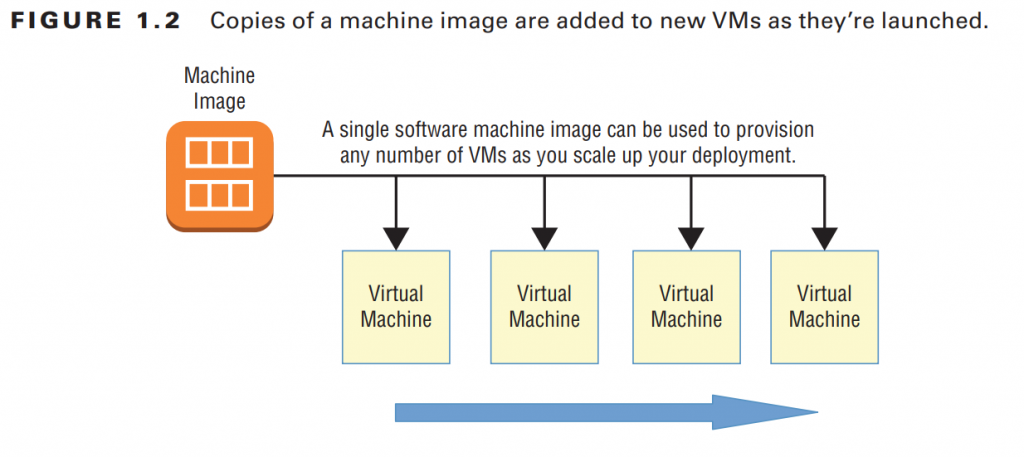

A scalable infrastructure can efficiently meet unexpected increases in demand for your application by automatically adding resources. As Figure 1.2 shows, this most often means dynamically increasing the number of virtual machines (or instances, as AWS calls them) you've got running. Through its Auto Scaling service, AWS lets you define a machine image that can be instantly and automatically replicated and launched as multiple instances to meet demand.
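The scale-out decision can be sketched in a few lines of Python. This is a simplified illustration, not the algorithm AWS uses: the function name, the per-instance capacity, and the minimum and maximum limits are all assumptions made for the example.

```python
import math


def desired_instances(load_rps: float, capacity_rps_per_instance: float,
                      minimum: int = 1, maximum: int = 10) -> int:
    """Return how many instances are needed for the current load,
    clamped to a configured minimum and maximum fleet size."""
    needed = math.ceil(load_rps / capacity_rps_per_instance)
    return max(minimum, min(maximum, needed))


# Hypothetical figures: each instance handles about 100 requests per second.
print(desired_instances(450, 100))   # 5
print(desired_instances(5000, 100))  # 10 (capped at the maximum)
```

The upper limit is a common safeguard: it stops a traffic spike, or a bug, from launching an unbounded number of instances.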

Elasticity

The principle of elasticity covers some of the same ground as scalability: both address managing changing demand. However, while the images used in a scalable environment let you ramp up capacity to meet rising demand, an elastic infrastructure will also automatically reduce capacity when demand drops. This makes it possible to control costs, since you'll run resources only when they're needed.
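To see why shrinking capacity controls costs, the following sketch compares the instance-hours consumed by an elastic fleet with those of a fleet sized permanently for peak demand. The hourly demand figures and the per-instance capacity are invented for illustration.

```python
import math


def instance_hours(hourly_load, capacity_per_instance, elastic=True):
    """Total instance-hours needed to serve a series of hourly loads.

    An elastic fleet runs only what each hour requires; a fixed fleet
    runs enough instances for the busiest hour, all the time.
    """
    per_hour = [max(1, math.ceil(load / capacity_per_instance))
                for load in hourly_load]
    if elastic:
        return sum(per_hour)
    return max(per_hour) * len(per_hour)


# Hypothetical requests per second over six consecutive hours.
demand = [80, 120, 450, 900, 300, 60]
print(instance_hours(demand, 100, elastic=True))   # 21
print(instance_hours(demand, 100, elastic=False))  # 54
```

In this made-up scenario the elastic fleet uses fewer than half the instance-hours of the fixed one, because it pays for peak capacity only during the peak hour.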

Cost Management

Besides the ability to control expenses by closely managing the resources you use, cloud computing transitions your IT spending from a capital expenditure (capex) framework into something closer to operational expenditure (opex). In practical terms, this means you no longer have to spend $10,000 up front for every new server you deploy, along with associated electricity, cooling, security, and rack space costs. Instead, you're billed much smaller incremental amounts for as long as your application runs. That doesn't necessarily mean your long-term cloud-based opex costs will always be less than you'd pay over the lifetime of a comparable data center deployment. But it does mean you won't have to expose yourself to risky speculation about your long-term needs. If, sometime in the future, changing demand calls for new hardware, AWS will be able to deliver it within a minute or two.

To help you understand the full implications of cloud compute spending, AWS provides a free Total Cost of Ownership (TCO) Calculator at https://aws.amazon.com/tco-calculator/. This calculator helps you perform proper "apples-to-apples" comparisons between your current data center costs and what an identical operation would cost you on AWS.
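The capex versus opex trade-off can be explored with a simple break-even calculation. This is a back-of-the-envelope sketch, not a replacement for a proper TCO analysis: the $10,000 up-front figure comes from the text above, while the monthly running costs are hypothetical.

```python
def cumulative_costs(months, upfront_usd, onprem_monthly_usd, cloud_monthly_usd):
    """Return (on_prem_total, cloud_total) after the given number of months."""
    on_prem = upfront_usd + onprem_monthly_usd * months
    cloud = cloud_monthly_usd * months
    return on_prem, cloud


def break_even_month(upfront_usd, onprem_monthly_usd, cloud_monthly_usd,
                     horizon_months=120):
    """First month in which cumulative cloud spending exceeds on-premises
    spending, or None if that never happens within the horizon."""
    for month in range(1, horizon_months + 1):
        on_prem, cloud = cumulative_costs(
            month, upfront_usd, onprem_monthly_usd, cloud_monthly_usd)
        if cloud > on_prem:
            return month
    return None


# Hypothetical monthly costs: $150 to run your own server, $400 in the cloud.
print(break_even_month(10_000, 150, 400))  # 41
# With a cheaper cloud bill, the cloud never becomes the costlier option.
print(break_even_month(10_000, 150, 100))  # None
```

With these invented numbers, the cloud deployment is cheaper for the first 40 months and costlier afterward, which is why long-term opex is not guaranteed to beat a data center deployment.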

The AWS Cloud

Keeping up with the steady stream of innovative new services showing up on the AWS Console can be frustrating. But as a solutions architect, your main focus should be on the core service categories. This section briefly summarizes each of the core categories (as shown in Table 1.1) and then does the same for key individual services. You’ll learn much more about all of these (and more) services through the rest of the book, but it’s worth focusing on these short definitions, as they lie at the foundation of everything else you’re going to learn.

AWS Platform Architecture

AWS maintains data centers for its physical servers around the world. Because the centers are so widely distributed, you can reduce your own services' network transfer latency by hosting your workloads geographically close to your users. This distribution can also help you manage compliance with regulations requiring you to keep data within a particular legal jurisdiction. Data centers exist within AWS regions, of which there were 17 at the time of writing (not including private U.S. government AWS GovCloud regions), although this number is constantly growing. It's important to always be conscious of the particular region you have selected when you launch new AWS resources, as pricing and service availability can vary from one to the next. Table 1.3 shows a list of all 17 (nongovernment) regions along with each region's name and core endpoint addresses.
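Choosing a region often comes down to balancing latency against jurisdiction requirements. The sketch below shows one way to express that choice in Python. The region codes are real AWS identifiers, but the `pick_region` helper and the latency measurements are hypothetical.

```python
def pick_region(latency_ms, allowed_regions=None):
    """Return the lowest-latency region, optionally restricted to the
    regions permitted by a compliance requirement."""
    candidates = {
        region: ms for region, ms in latency_ms.items()
        if allowed_regions is None or region in allowed_regions
    }
    if not candidates:
        raise ValueError("no region satisfies the constraints")
    return min(candidates, key=candidates.get)


# Hypothetical round-trip latencies measured from your users' location.
measured = {
    "us-east-1": 95.0,
    "eu-west-1": 28.0,
    "eu-central-1": 34.0,
    "ap-southeast-1": 180.0,
}

print(pick_region(measured))                                    # eu-west-1
print(pick_region(measured, allowed_regions={"eu-central-1"}))  # eu-central-1
```

When a regulation pins your data to one jurisdiction, the compliance filter is applied first and latency only breaks ties among the regions that remain.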

AWS Reliability and Compliance

AWS has a lot of the basic regulatory, legal, and security groundwork covered before you even launch your first service. AWS has invested significant planning and funds into resources and expertise relating to infrastructure administration. Its heavily protected and secretive data centers, layers of redundancy, and carefully developed best-practice protocols would be difficult or even impossible for a regular enterprise to replicate. Where applicable, resources on the AWS platform are compliant with dozens of national and international standards, frameworks, and certifications, including ISO 9001, FedRAMP, NIST, and GDPR. (See https://aws.amazon.com/compliance/programs/ for more information.)

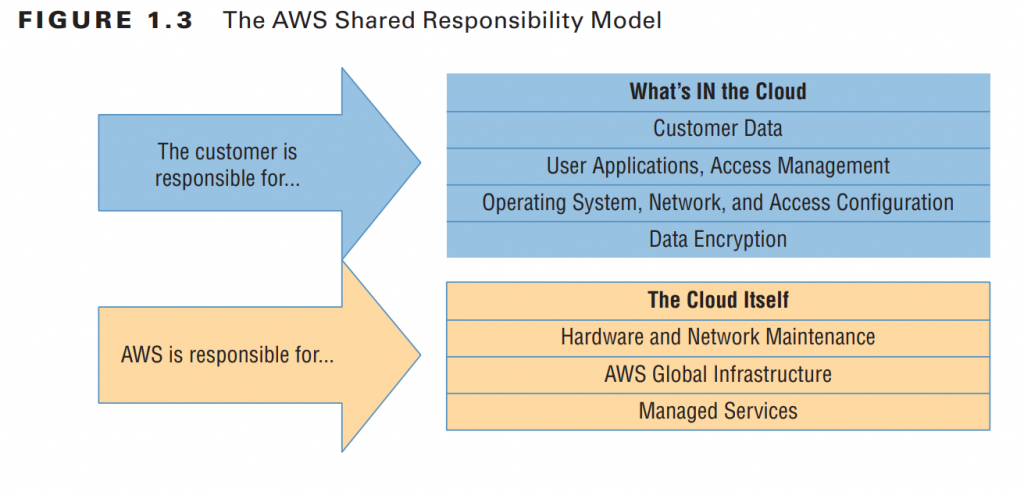

The AWS Shared Responsibility Model

Of course, those guarantees cover only the underlying AWS platform. The way you decide to use AWS resources is your business—and therefore your responsibility. So, it’s important to be familiar with the AWS Shared Responsibility Model. AWS guarantees the secure and uninterrupted operation of its “cloud.” That means its physical servers, storage devices, networking infrastructure, and managed services. AWS customers, as illustrated in Figure 1.3, are responsible for whatever happens within that cloud. This covers the security and operation of installed operating systems, client-side data, the movement of data across networks, end-user authentication and access, and customer data.

Infocerts, 5B 306 Riverside Greens, Panvel, Raigad 410206 Maharashtra, India

Contact us – https://www.infocerts.com